Autoplaying audio and video with the video tag and drawing a waveform

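The HTML <video> tag can play common audio and video formats such as MP3, Ogg, WAV, AAC, MP4, and WebM. Actual support depends on the browser and the operating system, and container formats such as AVI are generally not playable natively. This post walks through a page that autoplays media and draws its waveform to show how the <video> tag is used.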
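Because format support varies by browser, it can help to ask the browser directly. The sketch below uses the standard canPlayType() method of media elements; the helper name playableTypes and the list of MIME types are assumptions made for illustration only.

```javascript
// Hypothetical helper (not part of the original page): keep only the MIME
// types that the given media element reports it may be able to play.
function playableTypes(media, mimeTypes) {
  // canPlayType() returns '' (cannot play), 'maybe', or 'probably'
  return mimeTypes.filter(function (type) {
    return media.canPlayType(type) !== '';
  });
}

// In a browser this could be called as:
// playableTypes(document.createElement('video'),
//               ['audio/mpeg', 'video/webm', 'video/x-msvideo']);
```

An empty string from canPlayType() means the type is definitely unsupported; 'maybe' and 'probably' are only hints, so playback errors should still be handled.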

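Autoplay with the video tag involves three optional attributes: muted, autoplay, and controls. muted silences playback, autoplay starts playback automatically, and controls shows the browser's built-in control bar. Only muted and autoplay are needed for playback to start; controls just gives the user a way to pause or unmute. Besides setting these attributes in the HTML markup, we can also set the corresponding properties from a JS script, as shown below: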

<!DOCTYPE html>
<html>
<head>
  <title>Play audio and video</title>
  <meta charset="UTF-8">
</head>
<body>
  <video id="myVideo" autoplay muted controls>
    <source src="./mysong.mp3" type="audio/mpeg">
    <!--source src="video.mp4" type="video/mp4"-->
    Your browser does not support the video tag.
  </video>
  <script>
    var videoElement = document.getElementById('myVideo');
    // Muted autoplay: the same settings as the attributes above, applied from script
    videoElement.muted = true;
    videoElement.autoplay = true;
    videoElement.controls = true;
  </script>
</body>
</html>

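To keep pages from disturbing users with unexpected sound, browsers do not allow a page to start playing media with sound before the user has interacted with it. In practice, the autoplay attribute therefore has to be combined with muted for playback to start without any interaction.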
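The policy can also be observed from script: in current browsers play() returns a promise that is rejected with a NotAllowedError when unmuted playback is blocked. The following is a minimal sketch under that assumption; the helper name playWithFallback is hypothetical and is not part of the original page.

```javascript
// Hypothetical helper: try to start playback with sound and fall back to
// muted playback if the browser's autoplay policy rejects the attempt.
async function playWithFallback(media) {
  try {
    media.muted = false;
    await media.play();
    return 'unmuted';
  } catch (err) {
    // Anything other than a policy rejection is passed on to the caller
    if (err.name !== 'NotAllowedError') throw err;
    media.muted = true;
    await media.play();
    return 'muted';
  }
}

// In a browser this could be called as:
// playWithFallback(document.getElementById('myVideo')).then(console.log);
```

The returned string tells the caller which mode playback actually started in, so the page can decide whether to show an "unmute" hint.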

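To draw the audio waveform while the media plays, we route the element's audio through a Web Audio AudioContext, read the sample data with an analyser node, and draw it on a canvas. The implementation is shown below: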

<!DOCTYPE html>
<html>
<head>
  <title>Draw an audio waveform</title>
  <meta charset="UTF-8">
</head>
<body>
  <h1>Draw an audio waveform</h1>
  <video id="myVideo" controls>
    <source src="./mysong.mp3" type="audio/mpeg">
    Your browser does not support the video tag.
  </video>
  <canvas id="waveformCanvas"></canvas>
  <script>
    let video, analyser, ctx, canvas, audioContext, timerID, sourceNode;
    // Get the video element
    video = document.getElementById('myVideo');
    // Set up the audio graph when playback starts
    video.addEventListener("play", initWaveDraw);
    // Get the canvas element and its 2D drawing context
    canvas = document.getElementById('waveformCanvas');
    ctx = canvas.getContext('2d');

    function initWaveDraw() {
      // Create the audio context and graph only once:
      // a media element can be attached to a single source node
      if (!audioContext) {
        audioContext = new (window.AudioContext || window.webkitAudioContext)();
        analyser = audioContext.createAnalyser();
        // Audio path: video -> source node -> analyser -> speakers
        sourceNode = audioContext.createMediaElementSource(video);
        sourceNode.connect(analyser);
        analyser.connect(audioContext.destination);
        // Redraw the waveform every 200 ms
        timerID = setInterval(drawWaveform, 200);
      }
      // A context created before any user gesture starts out suspended
      if (audioContext.state === 'suspended') {
        audioContext.resume();
      }
    }

    // Draw the waveform
    function drawWaveform() {
      // Read the current time-domain samples (bytes, 128 = silence)
      var bufferLength = analyser.fftSize;
      var dataArray = new Uint8Array(bufferLength);
      analyser.getByteTimeDomainData(dataArray);
      // Clear the canvas
      ctx.clearRect(0, 0, canvas.width, canvas.height);
      // Stroke the waveform as one connected line
      ctx.lineWidth = 2;
      ctx.strokeStyle = 'rgb(0, 255, 255)';
      ctx.beginPath();
      var sliceWidth = canvas.width * 1.0 / bufferLength;
      var x = 0;
      for (var i = 0; i < bufferLength; i++) {
        // Scale the sample so that silence lands on the vertical middle
        var v = dataArray[i] / 128.0;
        var y = v * canvas.height / 2;
        if (i === 0) {
          ctx.moveTo(x, y);
        } else {
          ctx.lineTo(x, y);
        }
        x += sliceWidth;
      }
      ctx.lineTo(canvas.width, canvas.height / 2);
      ctx.stroke();
    }
  </script>
</body>
</html>
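The sample-to-pixel mapping used in drawWaveform can be looked at in isolation. getByteTimeDomainData() fills the array with unsigned bytes in which 128 represents silence, so dividing by 128 and multiplying by half the canvas height places silence on the vertical middle. The sketch below restates that mapping as a pure function; the name waveformPoints is hypothetical and is introduced only for illustration.

```javascript
// Hypothetical helper: convert byte time-domain samples into canvas
// coordinates, using the same arithmetic as drawWaveform.
function waveformPoints(samples, width, height) {
  var sliceWidth = width / samples.length;
  var points = [];
  for (var i = 0; i < samples.length; i++) {
    var v = samples[i] / 128.0;        // 1.0 corresponds to silence
    points.push({ x: i * sliceWidth, y: v * height / 2 });
  }
  return points;
}

// Four samples on a 4 x 100 canvas: silence, minimum, maximum, silence
var demoPoints = waveformPoints(new Uint8Array([128, 0, 255, 128]), 4, 100);
console.log(demoPoints[0].y, demoPoints[1].y); // prints: 50 0
```

Keeping the mapping separate from the drawing calls makes it possible to check the arithmetic without a canvas.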

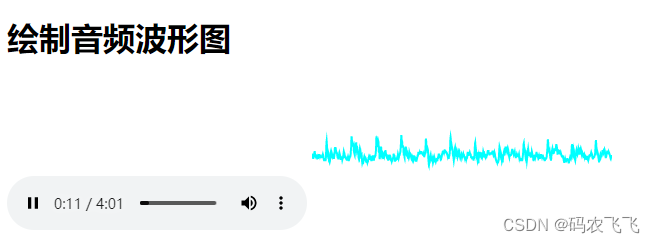

显示效果如下图所示:

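If we want the player to start automatically and then play with sound, we can take a roundabout approach: start playback muted, then use a timer to check whether the user has interacted with the page. Once an interaction is detected, we unmute the audio and start drawing the waveform. (By default, browsers do not allow sound before any interaction.) The implementation is shown below: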

<!DOCTYPE html>
<html>
<head>
  <title>Draw an audio waveform</title>
  <meta charset="UTF-8">
</head>
<body>
  <h1>Draw an audio waveform</h1>
  <video id="myVideo" controls>
    <source src="./mysong.mp3" type="audio/mpeg">
    Your browser does not support the video tag.
  </video>
  <canvas id="waveformCanvas"></canvas>
  <script>
    let video, analyser, ctx, canvas, audioContext, timerID, checktimerID, sourceNode;
    var hasUserInteracted = false;
    // Get the video element
    video = document.getElementById('myVideo');
    // Get the canvas element and its 2D drawing context
    canvas = document.getElementById('waveformCanvas');
    ctx = canvas.getContext('2d');
    // Start playback muted so that autoplay is allowed
    video.muted = true;
    video.autoplay = true;
    video.controls = true;

    // Record that the user has interacted with the page
    function handleUserInteraction() {
      console.log("user has interacted");
      hasUserInteracted = true;
    }
    // Treat a mouse click anywhere on the page as an interaction
    document.addEventListener('click', handleUserInteraction);
    checktimerID = setInterval(checkMouseClick, 1000);
    // Changing the volume or mute state through the controls also counts.
    // The listener is attached after a delay so that the volumechange event
    // caused by setting muted above is not mistaken for user input.
    setTimeout(function () { video.addEventListener("volumechange", handleUserInteraction); }, 2000);

    // Poll for an interaction, then switch to playback with sound
    function checkMouseClick() {
      if (hasUserInteracted) {
        initWaveDraw();
        video.muted = false;
        video.play().catch(function (err) { console.warn("play() was rejected:", err); });
        clearInterval(checktimerID);
      }
    }

    function initWaveDraw() {
      // Create the audio context and graph only once:
      // a media element can be attached to a single source node
      if (!audioContext) {
        audioContext = new (window.AudioContext || window.webkitAudioContext)();
        analyser = audioContext.createAnalyser();
        // Audio path: video -> source node -> analyser -> speakers
        sourceNode = audioContext.createMediaElementSource(video);
        sourceNode.connect(analyser);
        analyser.connect(audioContext.destination);
        // Redraw the waveform every 200 ms
        timerID = setInterval(drawWaveform, 200);
      }
      // A context created before any user gesture starts out suspended
      if (audioContext.state === 'suspended') {
        audioContext.resume();
      }
    }

    // Draw the waveform
    function drawWaveform() {
      // Read the current time-domain samples (bytes, 128 = silence)
      var bufferLength = analyser.fftSize;
      var dataArray = new Uint8Array(bufferLength);
      analyser.getByteTimeDomainData(dataArray);
      // Clear the canvas
      ctx.clearRect(0, 0, canvas.width, canvas.height);
      // Stroke the waveform as one connected line
      ctx.lineWidth = 2;
      ctx.strokeStyle = 'rgb(0, 255, 255)';
      ctx.beginPath();
      var sliceWidth = canvas.width * 1.0 / bufferLength;
      var x = 0;
      for (var i = 0; i < bufferLength; i++) {
        // Scale the sample so that silence lands on the vertical middle
        var v = dataArray[i] / 128.0;
        var y = v * canvas.height / 2;
        if (i === 0) {
          ctx.moveTo(x, y);
        } else {
          ctx.lineTo(x, y);
        }
        x += sliceWidth;
      }
      ctx.lineTo(canvas.width, canvas.height / 2);
      ctx.stroke();
    }
  </script>
</body>
</html>
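The page above polls a flag once per second. As a possible variation, not the approach used in this post, the unmuting could be triggered directly by the first interaction event. The sketch below assumes a hypothetical helper named unmuteOnFirstInteraction and an illustrative list of event names; it works with any EventTarget, such as document.

```javascript
// Hypothetical helper: unmute and (re)start playback on the first
// interaction event, without a polling timer.
function unmuteOnFirstInteraction(target, media, onUnmuted) {
  var eventNames = ['click', 'keydown', 'touchstart']; // assumed set of interactions
  var done = false;
  function handler() {
    if (done) return;
    done = true;
    // Only the first interaction matters, so detach every listener
    eventNames.forEach(function (name) { target.removeEventListener(name, handler); });
    media.muted = false;
    var result = media.play();
    if (result && typeof result.catch === 'function') {
      result.catch(function (err) { console.warn('play() was rejected:', err); });
    }
    if (onUnmuted) onUnmuted();
  }
  eventNames.forEach(function (name) { target.addEventListener(name, handler); });
}

// In the page above this could be wired up as:
// unmuteOnFirstInteraction(document, video, initWaveDraw);
```

Reacting to the event itself removes the delay of up to one second that the timer introduces, at the cost of slightly more listener bookkeeping.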