Python Web Scraping: A Fiddler Packet-Capture Tutorial for Fetching WeChat Official Account Data (PC Client)

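Preface

Today I'll show you how to use the Fiddler packet-capture tool to fetch data from WeChat official accounts through the PC client.

Fiddler is an HTTP proxy that sits between the client and the server, and it is one of the most widely used HTTP capture tools.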

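Development environment

- Python 3.8: runs the code

- PyCharm 2021.2: editor for writing the code

- requests: third-party module

- Fiddler (Chinese-localized edition): the packet-capture tool

- WeChat PC client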

How to capture packets

Configuring Fiddler

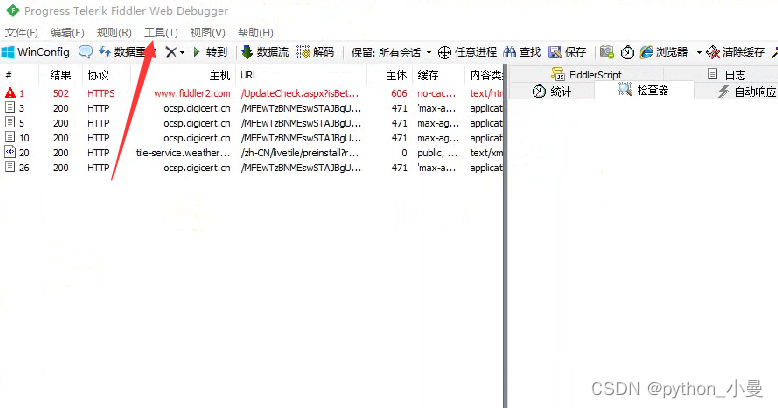

Open Fiddler, choose Tools, then Options.

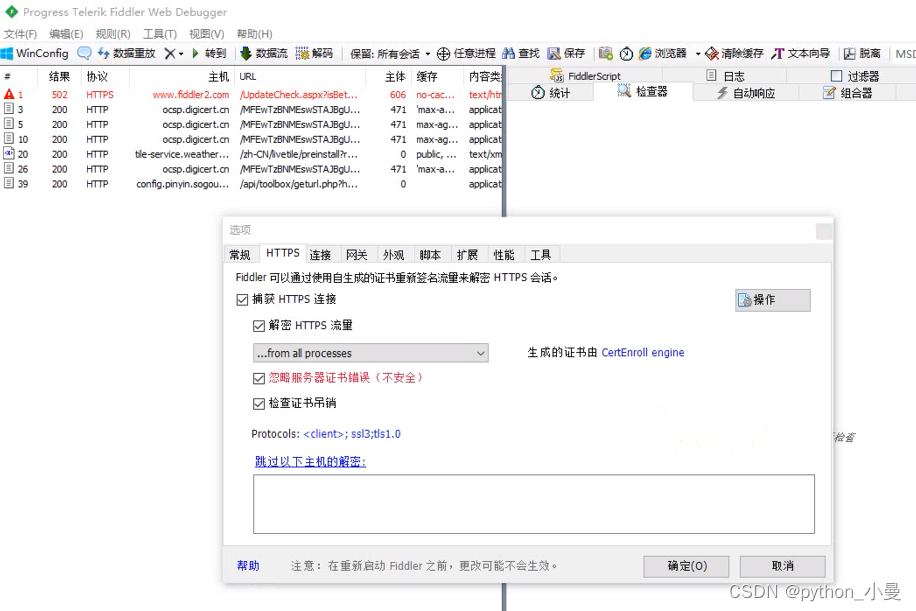

In the Options window, click HTTPS and tick all the checkboxes.

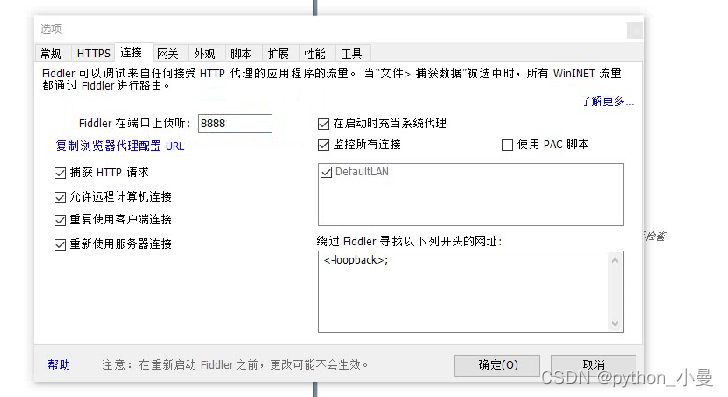

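In the Options window, click Connections, tick all the checkboxes, then click OK.

We also need to turn on the network proxy on the client side:

Address: 127.0.0.1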

Port: 8888 (Fiddler's default; use whatever value the Connections tab shows)
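The same address and port can also be handed to a Python script, which is handy for checking that your own requests look like the ones the WeChat client sends. Here is a minimal sketch; `fiddler_proxies` is a hypothetical helper name, and the default port is an assumption that should match what Fiddler is actually listening on.

```python
def fiddler_proxies(host="127.0.0.1", port=8888):
    """Build a proxies mapping in the shape the requests library expects.

    host and port are assumptions: they must match the address and port
    configured in Fiddler's Connections tab.
    """
    proxy = f"http://{host}:{port}"
    # Both plain HTTP and HTTPS traffic are sent to the same local proxy.
    return {"http": proxy, "https": proxy}


proxies = fiddler_proxies()
print(proxies)
```

With Fiddler running, passing `proxies=fiddler_proxies()` together with `verify=False` to `requests.get` should make the script's own requests show up in the session list.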

Capturing packets

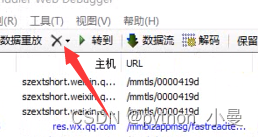

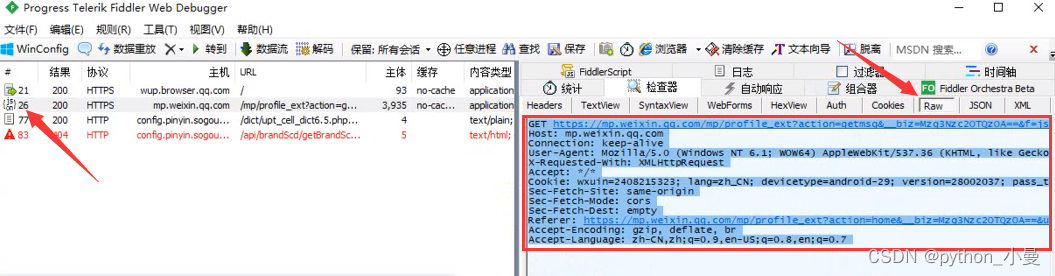

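Log in first, clear the sessions already listed in Fiddler, then open the official-account content you want.

Once the packet appears, click it and select Raw; that tab shows the full details of the request.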
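Copying headers out of the Raw tab by hand is error-prone, so it can help to parse the copied text instead. The sketch below assumes the Raw tab text has the usual shape of a request line followed by `Name: value` header lines; `parse_raw_request` and the shortened `SAMPLE_RAW` text are made up for illustration and are not the real capture.

```python
def parse_raw_request(raw):
    """Split raw HTTP request text into (method, target, headers)."""
    lines = raw.strip().splitlines()
    # Request line, e.g. "GET https://host/path?query HTTP/1.1"
    method, target, _version = lines[0].split(" ", 2)
    headers = {}
    for line in lines[1:]:
        if not line.strip():
            break  # a blank line ends the header section
        # Split on the first colon only, so URLs in values stay intact.
        name, _, value = line.partition(":")
        headers[name.strip()] = value.strip()
    return method, target, headers


# Shortened, made-up sample in the shape of a captured request.
SAMPLE_RAW = """GET https://mp.weixin.qq.com/mp/profile_ext?action=getmsg&offset=10 HTTP/1.1
Host: mp.weixin.qq.com
Accept: */*
Referer: https://mp.weixin.qq.com/mp/profile_ext?action=home
"""

method, target, headers = parse_raw_request(SAMPLE_RAW)
print(method, target)
print(headers)
```

The returned `headers` dict can be passed straight to `requests.get`.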

First request the list page to get all the detail-page links

Request headers

import json

import re

import requests

headers = {

'Host': 'mp.weixin.qq.com',

'Connection': 'keep-alive',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36 NetType/WIFI MicroMessenger/7.0.20.1781(0x6700143B) WindowsWechat(0x63090016)',

'X-Requested-With': 'XMLHttpRequest',

'Accept': '*/*',

'Cookie': 'wxuin=2408215323; lang=zh_CN; devicetype=android-29; version=28002037; pass_ticket=f85UL5Wi11mqpsvuWgLUECYkDoL2apJ045mJw9lzhCjUteAxd4jM8PtaJCM0nBXrQEGU9D7ulLGrXpSummoA==; wap_sid2=CJvmqfwIEooBeV9IR29XUTB2eERtakNSbzVvSkhaRHdMak9UMS1MRmg4TGlaMjhjbTkwcks1Q2E2bWZ1cndhUmdITUZUZ0pwU2VJcU51ZWRDLWpZbml2VkF5WkhaU0NNaDQyQ1RDVS1GZ05mellFR0R5UVY2X215bXZhUUV0NVlJMVRPbXFfZGQ1ZnVvMFNBQUF+MPz0/50GOA1AlU4=',

'Sec-Fetch-Site': 'same-origin',

'Sec-Fetch-Mode': 'cors',

'Sec-Fetch-Dest': 'empty',

'Referer': 'https://mp.weixin.qq.com/mp/profile_ext?action=home&__biz=Mzg3Nzc2OTQzOA==&uin=MjQwODIxNTMyMw%3D%3D&key=2ed1dc903dceac3d9a380beec8d46a84995a555d7c7eb7b793a3cc4c0d32bc588e1b6df9da9fa1a258cb0db4251dd36eda6029ad4831c4d57f6033928bb9c64c12b8e759cf0649f65e4ef30753ff3092a2a4146a008df311c110d0b6f867ab173792368baa9aaf28a514230946431480cc6b171071a9f9a1cd52f7c07a751925&devicetype=Windows+10+x64&version=63090016&lang=zh_CN&a8scene=7&session_us=gh_676b5a39fe6e&acctmode=0&pass_ticket=f85UL5Wi11%2BmqpsvuW%2BgLUECYkDoL2apJ045mJw9lzhCjUteAxd4jM8PtaJCM0nBXrQEGU9D7ulLGrXpSummoA%3D%3D&wx_header=1&fontgear=2',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'zh-CN,zh;q=0.9,en-US;q=0.8,en;q=0.7',

}

Send the request

url = f'https://mp.weixin.qq.com/mp/profile_ext?action=getmsg&__biz=Mzg3Nzc2OTQzOA==&f=json&offset=10&count=10&is_ok=1&scene=&uin=MjQwODIxNTMyMw%3D%3D&key=3e8646dd303f109219f39517773e368d92e1975e6972ccf5d1479758d37ecec3e55bc3cb1bb5606d79ec76073ab58e4019ee720c31c2b36fafa9fe891e7afb1e22809e5db3cd8890ab35a570ffb680d16617ac3049d6627e61ffdf3305e4575666e30ad80a57b14555aa6c5a3a0fb0001a6d5d2cd76fd8af116a086ce9ef2c8e&pass_ticket=f85UL5Wi11%2BmqpsvuW%2BgLUECYkDoL2apJ045mJw9lzjmzvDbqI6V6Y%2FkXeYCZ7WsuMSqko7EWesSKLrDKnJ96A%3D%3D&wxtoken=&appmsg_token=1200_VUCOfHI2jYSEziPbaYFlHoaB7977BJYsAb5cvQ~~&x5=0&f=json'

response = requests.get(url=url, headers=headers, verify=False)
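The captured URL carries `offset=10&count=10`, which suggests the list is paged. Rather than editing the long string by hand, the query can be assembled from named parts. This is only a sketch: `build_list_url` is a hypothetical helper, the credential values (`key`, `pass_ticket`, `appmsg_token`) have to come from your own capture, and the idea that `offset` simply advances by `count` per page is an assumption the capture hints at but does not confirm.

```python
from urllib.parse import urlencode

BASE_URL = "https://mp.weixin.qq.com/mp/profile_ext"


def build_list_url(biz, uin, key, pass_ticket, appmsg_token, offset=0, count=10):
    """Assemble the list-page URL from its query parameters.

    Pass the values in decoded form (for example with '=' rather than
    '%3D'); urlencode takes care of percent-encoding them.
    """
    params = {
        "action": "getmsg",
        "__biz": biz,
        "f": "json",
        "offset": offset,  # assumed to be the index of the first item
        "count": count,    # assumed to be the page size
        "is_ok": 1,
        "scene": "",
        "uin": uin,
        "key": key,
        "pass_ticket": pass_ticket,
        "wxtoken": "",
        "appmsg_token": appmsg_token,
        "x5": 0,
    }
    return f"{BASE_URL}?{urlencode(params)}"


# Placeholder values only; real ones come from the Fiddler capture.
print(build_list_url("BIZ==", "UIN==", "KEY", "TICKET", "TOKEN", offset=20))
```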

Parse the response

# general_msg_list is itself a JSON string, so it has to be decoded a second time

general_msg = response.json()['general_msg_list']

general_msg_list = json.loads(general_msg)

for general in general_msg_list['list']:

    content_url = general['app_msg_ext_info']['content_url']

    print(content_url)
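The double decoding is easy to get wrong, so here is a small offline sketch of that step. `extract_content_urls` and `SAMPLE_PAYLOAD` are made up for illustration. Two things in it are assumptions rather than facts from the capture: that some list entries may lack `app_msg_ext_info` (they are skipped), and that links may arrive with HTML-escaped ampersands (`html.unescape` is harmless if they do not).

```python
import html
import json


def extract_content_urls(payload):
    """Pull the detail-page links out of a decoded list-page response."""
    # The outer JSON holds another JSON document as a string.
    inner = json.loads(payload["general_msg_list"])
    urls = []
    for item in inner.get("list", []):
        info = item.get("app_msg_ext_info")
        if not info:
            continue  # assumed case: entry without an article attached
        url = info.get("content_url")
        if url:
            urls.append(html.unescape(url))
    return urls


# Made-up payload in the same shape as the real response.
SAMPLE_PAYLOAD = {
    "general_msg_list": json.dumps({
        "list": [
            {"app_msg_ext_info": {"content_url": "https://mp.weixin.qq.com/s?__biz=X&amp;mid=1"}},
            {"comm_msg_info": {"type": 1}},
        ]
    })
}

print(extract_content_urls(SAMPLE_PAYLOAD))
```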

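Then visit every detail-page link to get the images we need. The following steps run once for each content_url, so in the full script they belong inside the loop above.

Send the request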

html_data = requests.get(url=content_url, headers=headers, verify=False).text

Parse the data

img_list = re.findall('<img class=".*?data-src="(.*?)"', html_data)

print(img_list)
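To see what that regular expression picks up, it can be tried on a small piece of HTML without any network access. The sketch below reuses the same pattern; `extract_image_urls` and `SAMPLE_HTML` are made up for illustration, and dropping repeated URLs is an extra step of mine, not something the original script does.

```python
import re

# Same pattern as in the tutorial: the first data-src value that follows
# an <img class="..."> opening.
IMG_PATTERN = re.compile(r'<img class=".*?data-src="(.*?)"')


def extract_image_urls(html_text):
    """Return image URLs in page order, without repeats."""
    found = IMG_PATTERN.findall(html_text)
    # dict.fromkeys keeps the first occurrence of each URL, in order.
    return list(dict.fromkeys(found))


# Made-up HTML in the shape of an article page.
SAMPLE_HTML = (
    '<p><img class="rich_pages" data-src="https://example.invalid/a.jpg" style=""></p>'
    '<p><img class="rich_pages" data-src="https://example.invalid/b.png"></p>'
    '<p><img class="rich_pages" data-src="https://example.invalid/a.jpg"></p>'
)

print(extract_image_urls(SAMPLE_HTML))
```

Note that the pattern only matches `img` tags whose first attribute is `class`, so images written differently would be missed.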

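Save the data

import os

os.makedirs('img', exist_ok=True)  # create the output folder if it is missing

index = 0  # image counter: set this once, before the loop over articles

for img in img_list:

    img_data = requests.get(url=img, verify=False).content

    with open(f'img/{index}.jpg', mode='wb') as f:

        f.write(img_data)

    index += 1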
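The save step can be wrapped in a function so it is easy to try without hitting the network. In this sketch `save_images` and `fake_fetch` are hypothetical names; the download itself is passed in as a callable, so a stand-in can be used for a dry run and swapped for a real request later.

```python
import os
import tempfile


def save_images(img_urls, fetch, out_dir="img", start_index=0):
    """Write each image to out_dir as <index>.jpg and return the paths.

    fetch is any callable that takes a URL and returns the image bytes.
    """
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for index, url in enumerate(img_urls, start=start_index):
        path = os.path.join(out_dir, f"{index}.jpg")
        with open(path, mode="wb") as f:
            f.write(fetch(url))
        paths.append(path)
    return paths


def fake_fetch(url):
    """Stand-in downloader for a dry run; returns dummy bytes."""
    return b"fake-bytes-for-" + url.encode()


# Dry run in a temporary folder, so nothing is left behind.
with tempfile.TemporaryDirectory() as tmp:
    saved = save_images(["https://example.invalid/a", "https://example.invalid/b"], fake_fetch, out_dir=tmp)
    saved_names = [os.path.basename(p) for p in saved]

print(saved_names)
```

For real downloads, `fetch` could be `lambda u: requests.get(u, verify=False).content`, and `start_index` can be set to the number of images already saved so that later articles do not overwrite earlier files.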