Fetching PubMed article titles and abstracts with pandas + re

PubMed website: https://pubmed.ncbi.nlm.nih.gov/

Appending an article's PMID to this URL takes you to that article's detail page.
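To make the URL rule concrete, here is a minimal sketch. The helper name `article_url` and the PMID `12345678` are placeholders of mine, not part of the original script, and the numeric check is just an assumption about what a valid PMID looks like.

```python
BASE_URL = "https://pubmed.ncbi.nlm.nih.gov/"


def article_url(pmid):
    """Return the detail-page URL for a PMID given as an int or a str."""
    pmid = str(pmid).strip()
    if not pmid.isdigit():
        raise ValueError(f"PMID must be numeric, got {pmid!r}")
    return f"{BASE_URL}{pmid}/"


# 12345678 is a placeholder PMID used only for illustration.
print(article_url(12345678))
```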

The scraping logic is simple: requests fetches the page source, and regular expressions pull out the parts we need.
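Before the full script, the extraction step can be tried offline. The sketch below runs the same three patterns the script uses against a small hand-written HTML fragment. The fragment and the helper `first_match` are my own illustration, and the real PubMed markup may differ or change over time.

```python
import re

# Hypothetical page fragment for illustration only; not copied from PubMed.
SAMPLE_HTML = """
<html><head><title>Example article title - PubMed</title></head>
<body>
<span class="cit">2020 Jan;1(1):1-2.</span>
<div class="abstract-content selected" id="abstract">
  <p>First sentence of the abstract.</p>
</div>
</body></html>
"""


def first_match(pattern, html):
    """Return the first captured group, stripped, or None if nothing matches."""
    found = re.search(pattern, html, re.S)  # re.S lets "." match newlines too
    return found.group(1).strip() if found else None


title = first_match(r"<title>(.*?)</title>", SAMPLE_HTML)
date = first_match(r'<span class="cit">(.*?)</span>', SAMPLE_HTML)
abstract_html = first_match(
    r'<div class="abstract-content selected".*?>(.*?)</div>', SAMPLE_HTML
)

print(title)
print(date)
print(abstract_html)
```

Returning `None` for a missing field makes it easy to skip articles that have no abstract, instead of hitting an `IndexError` on `findall(...)[0]`.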

    import math
    import re

    import requests

    BASE_URL = "https://pubmed.ncbi.nlm.nih.gov/"
    # A dict can hold only one 'User-Agent' key, so a single value is enough.
    hd = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:76.0) Gecko/20100101 Firefox/76.0"}

    key = input("Enter your search term: ")  # the keyword you would search for on PubMed; check first that PubMed returns results for it
    local_url = input("Enter the output path and file name: ")  # path plus name; ".html" is appended for you

    # Read the number of result pages from the first search page.
    tdata = requests.get(BASE_URL, params={"term": key}, headers=hd).text
    pat_allpage = '<span class="total-pages">(.*?)</span>'
    allpage = re.compile(pat_allpage, re.S).findall(tdata)
    total = int(allpage[0].strip().replace(",", "")) * 10  # 10 results per page, so this is an estimate
    num = int(input(f"Enter roughly how many articles to fetch (about {total} in total): "))

    pat1_content_url = '<div class="docsum-wrap">.*?<.*?href="(.*?)".*?</a>'
    pat2_title = "<title>(.*?)</title>"
    pat3_content = '<div class="abstract-content selected".*?>(.*?)</div>'
    pat4_date = '<span class="cit">(.*?)</span>'

    count = 0  # articles attempted so far
    for j in range(math.ceil(num / 10)):
        # Let requests build the query string from params.
        data = requests.get(BASE_URL, params={"term": key, "page": j + 1}, headers=hd).text
        content_url = re.compile(pat1_content_url, re.S).findall(data)
        for href in content_url:
            if count >= num:
                break
            count += 1
            curl = BASE_URL + href.lstrip("/")  # avoid a double slash if href is site-relative
            try:
                cdata = requests.get(curl, headers=hd).text
                title = re.compile(pat2_title, re.S).findall(cdata)
                content = re.compile(pat3_content, re.S).findall(cdata)
                date = re.compile(pat4_date, re.S).findall(cdata)
                print("Title matched by regex: " + title[0])
                # "with" closes the file automatically after each write.
                with open(local_url + ".html", "a", encoding="utf-8") as fh:
                    fh.write(title[0] + " ----" + date[0] + "<br />" + content[0] + "<br /><br />")
            except Exception as err:
                # For example, an article without an abstract raises IndexError.
                print("Skipped " + curl + ": " + repr(err))
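One way to reason about how many result pages and articles the loop touches is to separate the arithmetic from the network calls. The sketch below does that with fake pages of integers instead of real search results. All three helper names are hypothetical, and the page size of 10 is the same assumption the script makes.

```python
import math

PAGE_SIZE = 10  # assumed number of results per page, as in the script


def parse_total_pages(raw):
    """Turn text such as ' 1,234 ' (possibly with newlines) into the int 1234."""
    return int(raw.strip().replace(",", ""))


def pages_needed(num, page_size=PAGE_SIZE):
    """Return how many result pages must be requested to cover num articles."""
    return math.ceil(num / page_size)


def first_n(pages, num):
    """Yield items from an iterable of pages, stopping after num items."""
    count = 0
    for page in pages:
        for item in page:
            if count >= num:
                return
            yield item
            count += 1


# Three fake pages of ten "articles" each, standing in for search results.
fake_pages = [list(range(start, start + 10)) for start in (0, 10, 20)]
print(pages_needed(20))
print(len(list(first_n(fake_pages, 20))))
```

A single running counter stops the loop at exactly `num` articles, including the edge cases where `num` is a multiple of 10.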

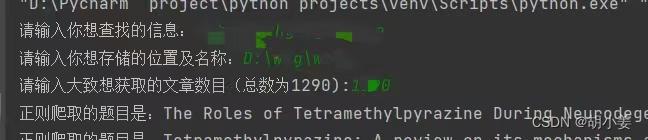

Enter the search term, the output path and file name, and the number of articles to fetch.

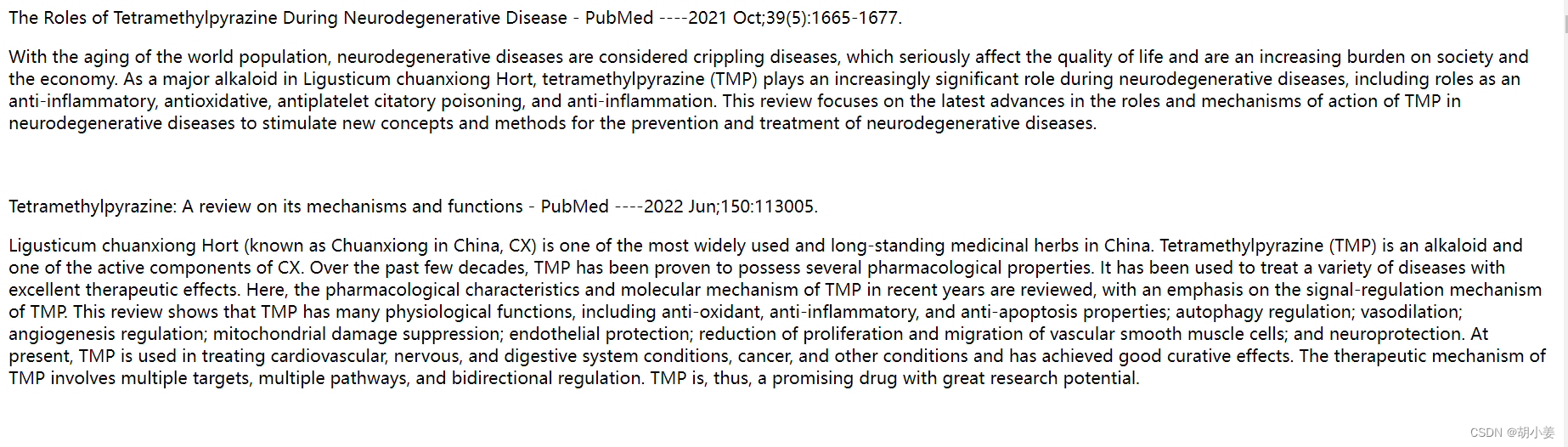

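This produces the result shown here. If you open the file in Edge, the one-click translate button in the upper-right corner lets you skim quickly for the articles you need.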

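It is worth noting that the output above is an HTML file because that makes it easy to skim and filter. You can also run the Python program below to get a CSV file, which is better suited to reporting and organizing.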

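Compared with the script above, the only difference is that each article's title, date, and abstract are put into a pandas DataFrame, and the combined result is saved to a CSV file.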
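As a small illustration of the DataFrame step on its own, the sketch below uses placeholder records instead of scraped values. The column names `title`, `date`, and `abstract` are my own choice; the idea is simply one row per article and one column per field.

```python
import pandas as pd

# Placeholder records standing in for scraped (title, date, abstract) values.
records = [
    ("Example title A", "2020 Jan;1(1):1-2.", "Abstract text A."),
    ("Example title B", "2021 Feb;2(2):3-4.", "Abstract text B."),
]

# One dict per article becomes one row in the DataFrame.
rows = [{"title": t, "date": d, "abstract": a} for t, d, a in records]
df = pd.DataFrame(rows, columns=["title", "date", "abstract"])

# With no path argument, to_csv returns the CSV as a string.
csv_text = df.to_csv(index=False)
print(csv_text)
```

To write a file instead, pass a path, for example `df.to_csv("123.csv", index=False, encoding="utf_8_sig")`. The `utf_8_sig` encoding adds a byte order mark, which helps Excel recognize the file as UTF-8.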

    import math
    import re

    import pandas as pd
    import requests

    BASE_URL = "https://pubmed.ncbi.nlm.nih.gov/"
    # A dict can hold only one 'User-Agent' key, so a single value is enough.
    hd = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:76.0) Gecko/20100101 Firefox/76.0"}

    key = input("Enter your search term: ")

    # Read the number of result pages from the first search page.
    tdata = requests.get(BASE_URL, params={"term": key}, headers=hd).text
    pat_allpage = '<span class="total-pages">(.*?)</span>'
    allpage = re.compile(pat_allpage, re.S).findall(tdata)
    total = int(allpage[0].strip().replace(",", "")) * 10  # 10 results per page, so this is an estimate
    num = int(input(f"Enter roughly how many articles to fetch (about {total} in total): "))

    pat1_content_url = '<div class="docsum-wrap">.*?<.*?href="(.*?)".*?</a>'
    pat2_title = "<title>(.*?)</title>"
    pat3_content = '<div class="abstract-content selected".*?>(.*?)</div>'
    pat4_date = '<span class="cit">(.*?)</span>'

    rows = []  # one dict per article
    count = 0  # articles attempted so far
    for j in range(math.ceil(num / 10)):
        # Let requests build the query string from params.
        data = requests.get(BASE_URL, params={"term": key, "page": j + 1}, headers=hd).text
        content_url = re.compile(pat1_content_url, re.S).findall(data)
        for href in content_url:
            if count >= num:
                break
            count += 1
            curl = BASE_URL + href.lstrip("/")  # avoid a double slash if href is site-relative
            try:
                cdata = requests.get(curl, headers=hd).text
                title = re.compile(pat2_title, re.S).findall(cdata)
                content = re.compile(pat3_content, re.S).findall(cdata)
                date = re.compile(pat4_date, re.S).findall(cdata)
                print("Title matched by regex: " + title[0])
                # Keep each field whole: one row per article, one column per field.
                rows.append({"title": title[0].strip(), "date": date[0].strip(), "abstract": content[0].strip()})
            except Exception as err:
                # For example, an article without an abstract raises IndexError.
                print("Skipped " + curl + ": " + repr(err))

    df = pd.DataFrame(rows, columns=["title", "date", "abstract"])
    print(df)
    # Change 123 to your own file name; the file is saved in the current folder.
    df.to_csv("123.csv", index=False, encoding="utf_8_sig")

I am a beginner. I learned from the experience of those who came before me, then added some summaries and rough modifications of my own. I hope this is helpful to readers.